Pathfinding Part ONE

- Oct 26, 2021

- 14 min read

Updated: Nov 29, 2022

Hi, Badump here.

About pathfinding…

Didn't I promise that like a month or 2 ago… anyways here it cometh.

Introduction, goals, and problems

Traditional methods comparisons

First try

Implementation

Surprising problems

Performance

Take two

The idea

What we kept

How the surprising problems were solved

Formations

Goals.

Implementation

Problems

Obstacle avoidance(How it forced pathfinding to change)

Unit movement

Goals

Implementation

Bandwidth considerations

Clusters

Feels like choose 2 of 3 scenario

Overall performance, and where are the issues

Organization

Introduction, goals, and problems

As everyone probably knows by now, Sanctuary aims to be playable at scales of thousands to tens of thousands of units, being able to run smoothly won't mean anything if when you give orders to units they form a train so long that you think we are on a ringworld, not a Dyson sphere. So what can we do? Make the units move in a blob? Without any other rules, it is ineffective.

So units must also have at least some basic organization so that in case your artillery/shields are faster than your tanks they don't single-file to their doom. So in general that part is gonna be handled by the formation code.

Now, when it comes to how units move, we have a lot of different behaviors to introduce, from hover tanks that can go over water, to dive bombers, and anything in between. Quite a lot of things to be honest.

And the actual pathfinding code must be able to handle large maps and tens of thousands of units. The issue is that all of these conditions must be met simultaneously. Not to mention the fact that the pathfinding must actually work in tandem with the other 2 features, all of which add to the complexity of the challenge.

And this last nail in the coffin is the reason why we couldn't just plop in some existing solution.

Traditional methods comparisons

So when you say pathfinding, what comes to mind first? A*? Flowfields?

That paper from developers of Castle Story about Hierarchical pathfinding that you can find https://www.gdcvault.com/play/1025320/Hierarchical-Dynamic-Pathfinding-for-Large ? (I am going to refer to it as Hpa*)

No matter what you guessed, what comes to mind is at least a path between A and B that avoids obstacles. And without going into details on how it works, the most popular algorithm for doing that exact thing is A*. It is reasonably efficient but unfortunately has a nasty thing about having cases where the performance goes down as the square of the map size. So if the map is 5x5 let's say the performance loss is "25", but if the map is 40x40, then the performance loss is "1600".

The performance loss is our imaginary unit that is inversely proportional to framerate

We have another issue on our hands; the issue that unit counts tend to go up as the map size increases. So Having 2 players each with 50 units on 5x5 map results in 2 * 50 * 25 = 2500. And having 16 players on 40x40 each with 500 units results in, 1600 * 10 * 500 = 8 000 000. As you can see the lag tends to go up very very fast as the scale goes up.

Now let's see how we can fight that problem. There are 3 obvious solutions.

Reduce the computational cost of each calculation(Aka we speed up everything by 10x)

Reduce unit and/or player count

Have smaller maps.

Now let's go over each one.

The issue with the first solution is that even if we make it 10x as fast, 8 000 000 / 10 = 800 000, the computational cost would still be way too great. We need to make it another 100x faster so it can be in the same order of magnitude as the first example.

The issue with the second solution is that it goes against the whole point of Sanctuary, while we aren’t aiming for “scale above all”, we are also not aiming for gameplay consisting exclusively of micromanagement.

And the issue with the third solution is that it is actually the biggest driver of performance issues. So reducing the map size would be the most performance-improving feature. But you cannot really have a lot of units in small maps, therefore it isn't suited to our use case.

Anyways it seems we need a solution that combines the advantages of 2 and 3 but without actually paying the price. Now, what could help us do that?

Let's start with the aforementioned flowfields. What are they? And how they help.

Well as the name suggests, they are fields, more specifically fields that span the whole map.

As you can see on the images, the flow field creates arrows that point to the most optimal path to the target location, no matter where you are. So imagine that you are a player in a labyrinth, and on the floor, there are flow field arrows. As long as you follow them, you will always reach the destination.

Unless there are no arrows, which is what happens when there is no path to the destination

You should now have an idea of the way it works. The question now is: "what are its performance implications?" Well, remember how in our previous example, each unit must calculate its own path to the goal? Well, that is no more. Now each unit has a constant cost of just looking up the flow field at its current position. Meaning that there is practically no cost to actually having more units. So you can effectively have an unlimited amount of units in the game and not see any performance drop due to pathfinding.

Sounds amazing! Let's get started... Not so fast! There is something I haven’t yet told you about flowfields, that might change what calculations we need to do. Remember the original calculations were total units * map size squared.

Here is a question: where is each unit going? What is their target location? Remember how I said that flowfields are leading toward a single location. Well, what happens if you give 2 units different positions to go toward. What if you gave each of those units a different target location. Well in that case you are doing no better than A*, and in fact much worse, because flowfields are slower than A* for a single unit.

Realistically we did massively reduce our effective unit count for the purpose of the calculations but it is not exactly 1, not it is small enough to be negligible. Especially when AI gets involved, with its 100000 APM. Anyways we can’t really do much more on reducing per unit cost, so let’s try the other approach, and see what it does for us.

And neither solution is any good for answering whether I can reach the target at all. Both will waste time, even if a unit can in no way reach the target location. Imagine islands.

Hierarchical pathfinding (I recommend reading the presentation, it is really good!). Come back here when you are done.

And in case you need a TLDR:

The goal of Hpa* is to allow having big worlds, like sizes so big, that you won’t even have the time to worry about pathfinding for more than 1 unit, and handle terrain that is constantly changing.

Now that I said changing terrain, how do you think the aforementioned methods would handle building a wall or plopping a big factory, and blocking unit paths? (hint they don't)

Well here comes Hpa* to save us. Because of the way that the map is preprocessed it allows us to only update the parts of the map that were changed, and each time we query the pathfinding we get an up-to-date path. Which is a big improvement over A*, now instead of having to path over the whole map. we only need to path over small segments and reach the destination.

Well, unfortunately, is that while it helps a lot computing less, the computations we do are much more expensive, so Hpa* only pays off on big maps. The smaller the map is the worse it performs. And the cost is constant over the entire time a unit is pathfinding. That is not exactly good, but not too bad either. Still, pathfinding for 10s of thousands of units gets expensive. Anyways I did start with it as the first take on pathfinding so let's see what happened.

First try

Implementation

Well, the first thing that we need to do is to create the navmesh. As the paper earlier described, it has hierarchies. So how do we code that? Well, let's start from the very bottom.

The first layer is simply taking the terrain and making it into nodes. Then each 4x4 square is grouped into another higher-level node, and again.

(1)

The size of 4 is arbitrary, it can easily be changed. (Arbitrary now, it matters a lot later on)

Now we need to add some obstacles.

(2)

So now we need to find how the nodes would be built on the first layer

(3)

The different colors signify different areas, you cannot go from green to red area or vice versa. But this is only layer 1. Obviously, there is no obstacle between the left and right cells. So let's move on to the next layer

(4)

So basically we only need to see if our current position and destination are the same “color”

If you are wondering why red and green got switched, it is to demonstrate that when 2 cells meet they agree on color so to say.

Ok here is the more technical explanation. For each cell of the first level, we make a list of neighbors, so the smallest squares have only 4 or less, top, left, right, bottom. And we can actually skip doing that as we can always look it up. But imagine we have a list of them for now. For the larger nodes, we cannot skip that. And of course, if the square is blocked, then it is not a neighbor. Nodes with 0 neighbors are totally fine. After all, the highest level node is gonna be such.

Moving on, we do something sort of “filling” the square, and all nodes that were filled, create a new higher-level node. In the 3d pic. All the green squares form a new higher-level node. And the red one too. Now those nodes can only contain squares that are of the same base node.

Ok let’s get some terminology:

Base node: This is the whole square, it contains multiple nodes of a lower level, and also multiple nodes of the same level.

Node: It is the area covered by the red/green color. For example, the lv2 node is the red and green total area in picture 3. A level 3 node would cover all the green and red areas in picture 4, in total there are 2 lv3 nodes in picture 4, and 3 lv2 nodes in picture 3.

Ok now let’s define neighbors. That is every same level node that is a parent of the neighbors of the children. Ok here is easier to think example:

My friends are the parents of the friends of my children (Assuming children have only friends at their age). Meaning I am not friends with the children themselves, also not friends of myself.

And obviously, the children must have only 1 parent. Otherwise, it is a bug (Or the parents' muse fuse).

Imagine fighting for custody and the judge gives you Potara earrings lol.

Moving on, when you create all lv2 nodes from the lv1 nodes. You go higher up. Now you do the same process with lv3 nodes to create them from lv2 nodes. Until you reach the highest possible node. Said limit is predefined at the start. Now let's see how that would make finding if you can get from a to b trivial. The answer is simply to check the parent until a common parent is found. If you find one, then good, a path exists. If you don't, well it means there is no possible path. This makes traversing the tree pretty much instantaneous. Or O(log n) compared to O(n^2) to find a path. In numbers, let's say the map is 5000 units wide. With a node size of 4, the Hpa* is around 4 million times faster. Now let’s increase the map size 10x, and make the Hpa* nodes 8 instead of 4. Now it is around half a billion times faster. Now, this is only for finding if a path exists. Not actual pathfinding costs.

Now about the actual traversal, to start we need to find the common parent node, the closer the better. Afterward, we need to find a path from the child of the said node that we are in, to the parent node we want to go to. This is slightly easier for when the size is 2 instead of 4 or more, because you only need to say left/right/up/down, otherwise you actually need to find a path. But that is not that bad as you are pathing on a tiny grid, except you do it for each level. So there also must be some balance between node size and times you need to travel.

For example a 1024x1024 map is gonna have log2(1024) = 10 layers to traverse. But when the node size is 4 then there are gonna be log4(1024) = 5 layers. Making it even bigger for example 16 is gonna yield 2.5, but we cant have 0.5 layers, so we round up to 3. That would make the minimal size of 4096. Which is also 3.

Unfortunately, the traversal, while not expensive, is also not exactly dirt cheap, even on my pc, I struggled to get running smoothly more than 20 000 units at once using that approach. While some optimizations could push it higher, it is still not good enough, also other issues.

Surprising Issues.

One of the issues is that it forms a hierarchy but you only get to know the next big node, which is kinda an issue when you need some more accurate solution. Here in this example, we need a path from green to red. Here is what it would look like at the top level.

As you can see the path you will get is black, while purple is more accurate. Note that we are still talking about lv2 paths, meaning paths between lv2 nodes inside a common lv3 parent.

Smoothing that path is gonna be quite a bit of a headache. As it is not exactly trivial.

Also, another problem here are some pics.

The black is what we would get as usual. Because we are told to go to the right now, the shortest path is right ahead.

Purple is what it would be if we had instead told it to go to the bottom right node(Still in the same parent node). But for that, we would need to know where the goal is ahead of time, in the next node.

And orange is how we could have smoothed the path.

Now you could ask, why don't we smoothen the black one? Well, the thing is we only know the first part of the black path, when it is executed. So until we cross the thick gray line, we have no idea where we should go. We could mitigate that problem by calculating in advance, but the thing is, that the cost increases by the number of precalculations. So the further you go the more expensive it is. Now we can mitigate that somewhat by caching, but the more you precalculate the more prone it is to break when the path actually needs to change. Like for example a wall being built. So it is best to keep it as short as possible. And this doesn’t work on long paths. Like if there are any walls or detours from the perfect path, then it becomes near impossible to make it pick well.

Performance

The cost of the algorithm is around log(mapSize) * unit count. And unfortunately as mentioned earlier it is not exactly cheap. While good for smaller scales in 100s of units, it becomes a major bottleneck when you multiply scale by thousands. Still, it is a major improvement over A* per unit cost and doesn't create lag spikes like flowfields do, especially when you have multiple targets for different units.

Take Two

Hmm, wouldn't it be nice to have a pathfinding algorithm that works well for big maps and has no or trivial cost per unit…

That would really be nice.

The idea

You could have probably seen it from the orbit of Pluto, but what we do is combine flowfields and hierarchical structures. We take the hierarchy part that allows us near instantaneous calculation per unit and add flowfields to it, so every unit can share the same path. Altho in the end, we do kinda end up butchering a major part of the hierarchy, as long as we don’t crank up the map size to 11(I am talking sizes like the surface of the earth, not some puny 40x40km map), it should perform well enough. And if we do, then we need to think a little bit harder.

Of course, those 2 concepts don’t exactly play nice with one another. The next step is to figure out what exactly we need and what we don't need. Having the more stuff our algorithm doesn’t need to be able or be good at, makes creating a very optimized algorithm much easier. Alternatively what optimizations can we apply and would they break anything important?

Well, let's start thinking about how we can start mixing both ideas. One way would be to increase cell size and perform flowfields instead of A* and cache that. And while not a bad solution, and allows us to reuse a lot of the previous calculations, it still technically involves generating a flow field over a large portion of the map. Worst case, you order a circle of units around the border of the map, to converge to the center. They would all end up generating a flow field over the whole map, albeit it would be distributed over time. And also that it would generate many layers of it, meaning it would be more expensive than just doing an ordinary flowfield. Nevertheless, it seems like a viable solution. But it would not generate a very high-quality flow field anyway, and we can do better. If the flow field is not gonna be perfect then we can pull some tricks out.

What if we can cache or precalculate parts?

Well, that is already what hierarchical does, but we want to go even further beyond. Especially having 0 run time cost would be nice. But looking at it through a hierarchical perspective doesn’t help us much. Let's switch to a more flow field-centric approach.

Instead of trying to add flowfields to hierarchical, we add hierarchical to flowfields.

Now at first look, flow fields can't really be cached, can they? Well, we can keep every order ever issued, but that is gonna only start being useful after 100s of thousands of commands have been issued. A 512*512 map is 260k. Anything bigger is gonna make caching that impossible. Also, that is gonna kill our ram, we are gonna need to use tape storage to cache it. (Magnetic tape is surprisingly still used, google it). So caching all orders is not gonna be possible, then what about caching small parts of it, and somehow using that later on.

But what exactly do we cache?

Well, let's think about hierarchy for a second. Now back to flowfields. How can we somehow leverage hierarchy to construct flowfields? Well… Let's go back, we already have a sorta navmesh constructed by the hierarchical pathfinding. It contains the nodes, base nodes, parents, etc… We can use it.

What we kept

Continuing, we decide to use the navmesh, but not really the pathfinding algorithm itself that was left over. Now we need to construct an alternative way of pathfinding. That in the end looks like a flowfield.

Let's make a statement and see how we can use it:

Flowfields with centers near one another are pretty much the same when you get further away from them.

Here are some images that illustrate that. All 4 have origins that are different.

(I wrote the program to make those flow fields images, and the source code is on Patreon, perfect if anyone wants to experiment with their flowfields methods.)

Do you see how the bottom, side always remains mostly the same?

It might on average be slightly brighter or darker, and the start of the bridge might look different but toward the end and the other land, it looks exactly the same.

This means that each time we calculate a flowfield, it is kinda wasteful. Like moving the target position by 1 unit, creates a flow field that is 99% the same, but still totally new.

Now there are few ways to remedy that, but we gotta take one other feature into account. That terrain might change, and areas that were pathable might no longer be. We really don’t want to recalculate each order, that would be very bad performance-wise.

So even if we optimize and cache flowfields, it would still not be good enough when a wall gets plopped. But that is the special magic of hierarchy, so we add it right now.

We use the old layers, but frankly, we only need the first 2. So now we have the terrain into chunks of some size that all neighbors know. Well… We calculate the flowfields between neighbors. And then at the next layer, we calculate the flowfield again, but this time, the whole area is tiny, 512x512 with node size of 32 resulting in 16x16 total of 256 nodes. The performance cost at the beginning is the same as having to calculate 8 flowfields over the whole map. Which is not too bad, not really significant.

Of course, this approach ends up causing quite a few difficulties, and calculating the connecting flowfields is kinda complicated. Especially when you factor in how units move, and formations. These two elements complicate pathfinding a lot.

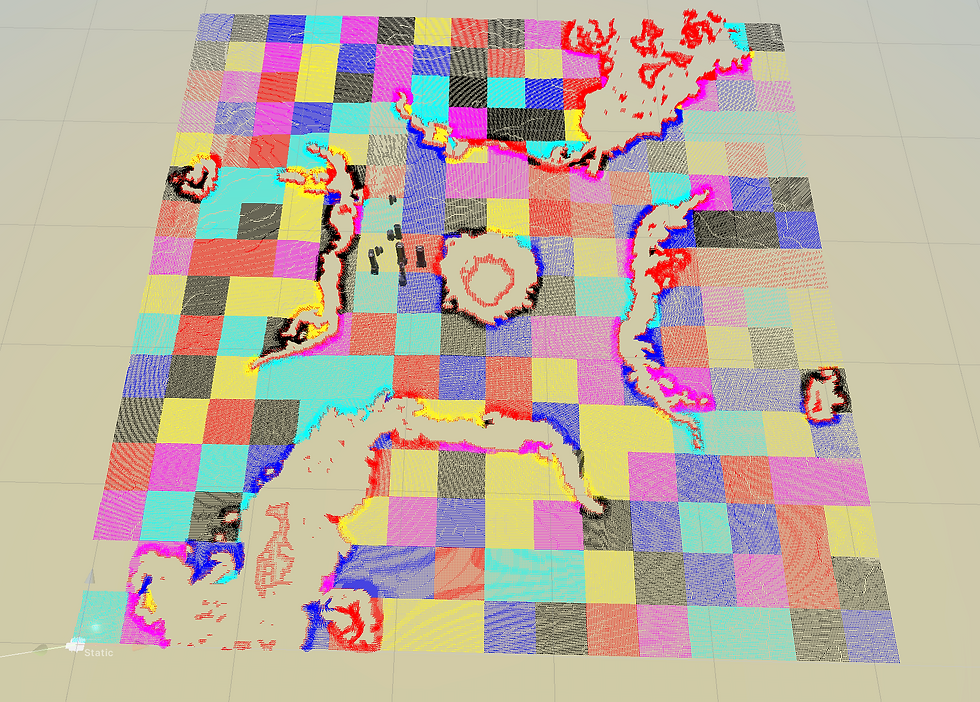

Here are a few pictures of the final flowfields, with different node levels.

Obviously the smaller the node is the more accurate it becomes, but I found the balance to be around 16 or 32.

Challenges

The first few tests showed the pathfinding to work reasonably well, but it had some issues.

Like units liking to wall hug too much, making any large groups not perform well.

Then there were the gaps in the walls. That was “technically” pathable terrain but in practice bad. And in general lots of tweaks to how the navmesh itself was generated, that massively improved the pathfinding. There is also a need to prevent steep changes in the pathfinding, mainly to prevent unit wobbling, and add lots of small and not so small features in the flowfields themselves, to make them more unit friendly.

Anyway another big wall of text is coming next week so stay tuned

Comments